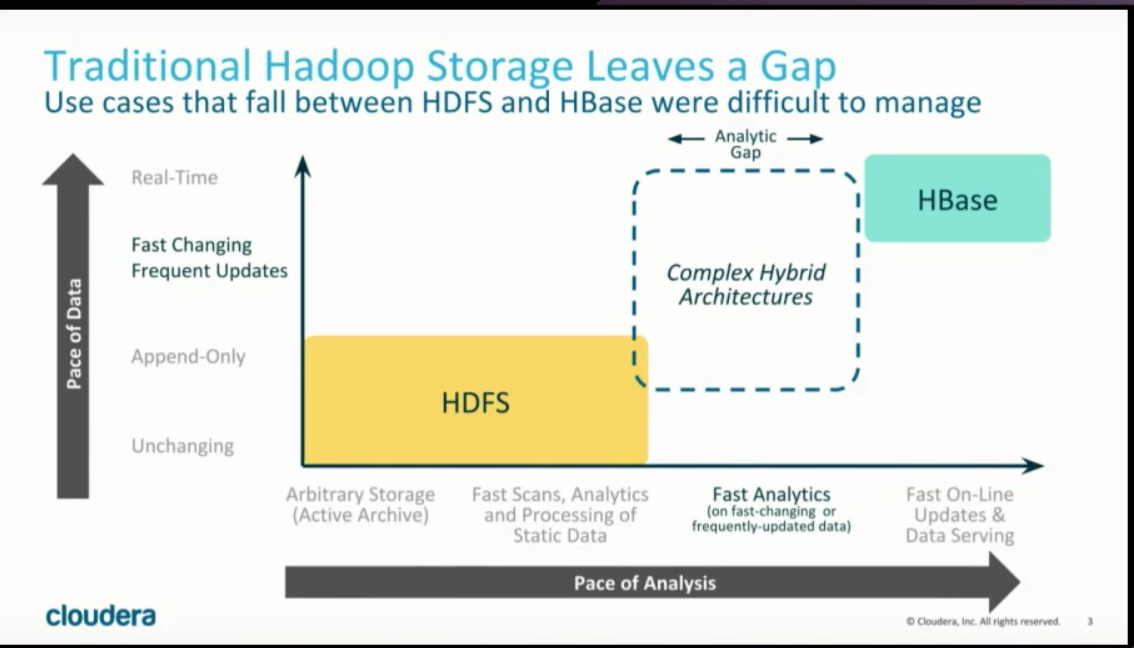

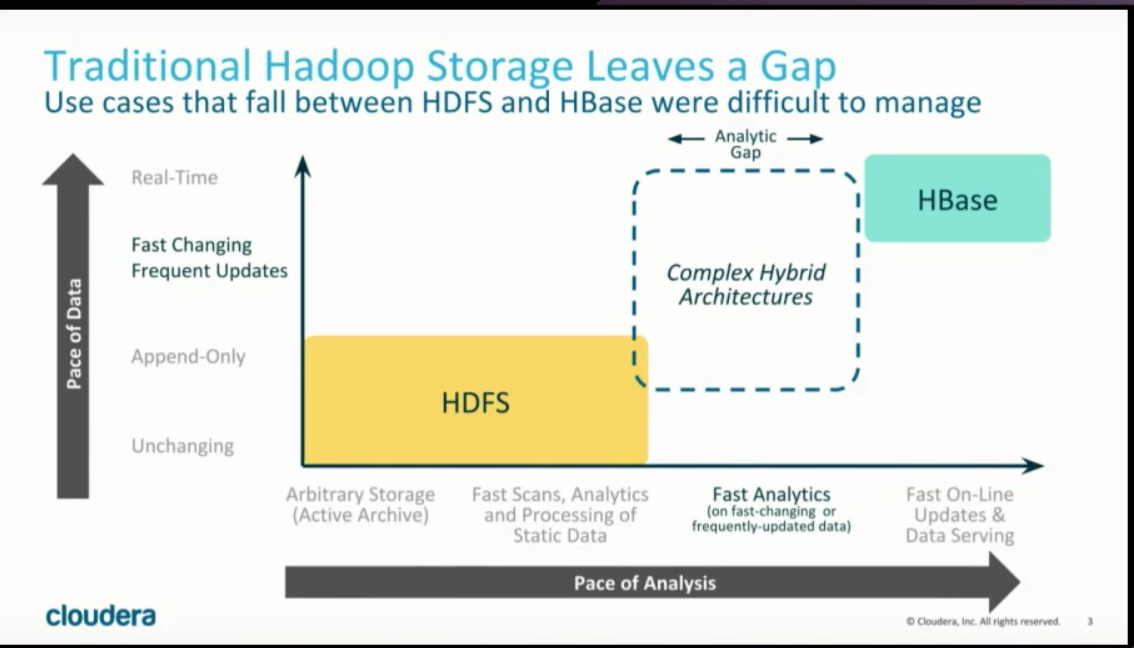

Gap

HDFS

- Good for append-only data storage

- Combined with Apache Parquet (the columnar storage format of the Hadoop ecosystem), it can provide good analytics performance

Apache Parquet

- Takes advantage of a compressed, efficient columnar data representation

- Uses the record shredding and assembly algorithm described in the Dremel paper

- Supports very efficient compression and encoding schemes

- Allows compression schemes to be specified on a per-column level

- Designed to be extensible, so new encodings can be added as they are invented and implemented
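To make the columnar idea concrete, here is a minimal sketch in plain Scala (no Parquet or Spark involved). It is not Parquet's actual implementation: the `Employee` record, its `dept` field, and the helper names are hypothetical, and run-length encoding stands in for the family of encodings Parquet supports. It shows why storing each field as its own column helps: repeated values sit next to each other and compress well, and an aggregate over one field touches only that column.

```scala
// Hypothetical record type; `dept` is added purely for illustration.
final case class Employee(name: String, dept: String, salary: Long)

object ColumnarSketch {
  // Row layout to column layout: one sequence per field.
  def toColumns(rows: Seq[Employee]): (Seq[String], Seq[String], Seq[Long]) =
    (rows.map(_.name), rows.map(_.dept), rows.map(_.salary))

  // Run-length encoding: collapse consecutive equal values into (value, count).
  def runLengthEncode[A](column: Seq[A]): Vector[(A, Int)] =
    column.foldLeft(Vector.empty[(A, Int)]) { (runs, value) =>
      runs.lastOption match {
        case Some((prev, count)) if prev == value =>
          runs.init :+ ((prev, count + 1)) // extend the current run
        case _ =>
          runs :+ ((value, 1))             // start a new run
      }
    }

  def main(args: Array[String]): Unit = {
    val rows = Seq(
      Employee("Michael", "eng", 3000L),
      Employee("Andy", "eng", 4500L),
      Employee("Justin", "ops", 3500L),
      Employee("Berta", "ops", 4000L)
    )
    val (_, depts, salaries) = toColumns(rows)

    // Four dept values shrink to two runs.
    println(runLengthEncode(depts)) // Vector((eng,2), (ops,2))

    // Summing salaries reads only the salary column.
    println(salaries.sum) // 15000
  }
}
```

Because each column holds values of a single type, a different encoding can be chosen per column, which is the property the per-column compression bullet refers to.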

Apache Spark and Apache Parquet

// write

scala> val sqlContext = new org.apache.spark.sql.SQLContext(sc)

scala> val emps = sqlContext.read.json("employees.json")

scala> emps.write.parquet("employees.parquet")

// read

scala> val empsParqfile = sqlContext.read.parquet("employees.parquet")

empsParqfile: org.apache.spark.sql.DataFrame = [name: string, salary: bigint]

scala> empsParqfile.registerTempTable("emps")

scala> val empsRecs = sqlContext.sql("SELECT * FROM emps")

scala> empsRecs.show()

+-------+------+

|   name|salary|

+-------+------+

|Michael|  3000|

|   Andy|  4500|

| Justin|  3500|

|  Berta|  4000|

+-------+------+
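The session above reads every column with `SELECT *`. Since Parquet is columnar, an analytics query usually benefits from asking only for the columns it needs. The following is a rough sketch for the same spark-shell session, assuming the `sqlContext` created earlier and the `employees.parquet` directory written above; the `3500` threshold and the `wellPaid` name are arbitrary choices for illustration.

```scala
// Assumes the spark-shell session above: `sqlContext` exists and
// employees.parquet has already been written.
val emps = sqlContext.read.parquet("employees.parquet")

// Project a single column and filter on salary (threshold is illustrative).
val wellPaid = emps.filter("salary > 3500").select("name")

// Print the physical plan; it is one way to inspect what the scan reads.
wellPaid.explain()

wellPaid.show()
```

How much of the projection and filter is pushed down into the Parquet scan depends on the Spark version and its configuration, so the plan printed by `explain()` is the thing to check rather than assume.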